Facebook Users Can Manipulate Own Emotions With New Google Chrome Extension

Any Facebook users left out of the massive psychological study the social network secretly completed on hundreds of thousands of users can now willingly participate in their own mood study with a Google Chrome plug-in.

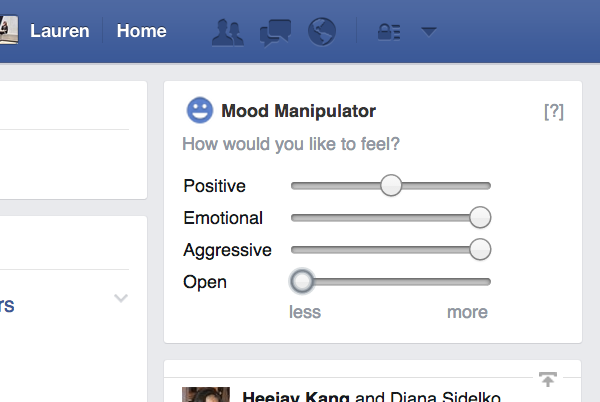

The “Facebook Mood Manipulator” is available for free on the Chrome Web Store, giving users the power to control the way they feel by customizing which of their friends’ posts appear on their news feed.

The extension lets Facebook users determine how positive, emotional, aggressive, or open they want to feel by sliding each feeling toward “more” or “less.” Sliding the “aggressive” scale toward “more,” for example, would curate the news feed to the point where it’s full of politically opinionated posts or acerbic statuses complaining about customer service. Moving the “emotional” slider toward “more,” by contrast, would increase the number of wedding rings, birthday cakes, and pictures of children.

Lauren McCarthy, a New York City-based artist and programmer, created the plug-in in response to the news last week that Facebook sought to manipulate 700,000 users’ emotions by filling their news feeds with either negative or positive posts. The results, which nominally showed that emotions can transfer via social media, were published in the Proceedings of the National Academy of Sciences.

“Why should Zuckerberg get to decide how you feel? Take back control,” McCarthy wrote on her site. “Aw yes, we are all freaked about the ethics of the Facebook study. And then what? What implications does this finding have for what we might do with our technologies? What would you do with an interface to your emotions?”

And, by all accounts, the extension really works. The algorithm is based on Linguistic Inquiry and Word Count (LIWC), the same system that the Facebook study relied on to evaluate the context and phrases in the 700,000 users’ Facebook posts.
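For readers curious how word counting can drive a slider, here is a minimal sketch of the general idea. It is not McCarthy's code: the tiny word lists, the function names `category_score` and `filter_feed`, and the 0.1 threshold are all assumptions made up for illustration, and the real word-count dictionaries are far larger.

```python
import re

# Hypothetical mini-lexicon for illustration only; not the real dictionaries.
LEXICON = {
    "positive": {"happy", "love", "great", "congrats", "wonderful"},
    "aggressive": {"hate", "angry", "worst", "terrible", "fight"},
}


def category_score(text, category):
    """Return the fraction of words in `text` found in the category's word list."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in LEXICON[category])
    return hits / len(words)


def filter_feed(posts, category, setting, threshold=0.1):
    """Filter posts by a slider setting.

    "more" keeps posts scoring at or above the (assumed) threshold,
    "less" keeps posts scoring below it, anything else leaves the feed as is.
    """
    if setting == "more":
        return [p for p in posts if category_score(p, category) >= threshold]
    if setting == "less":
        return [p for p in posts if category_score(p, category) < threshold]
    return list(posts)
```

Under these assumptions, setting “aggressive” to “more” on a feed containing a customer-service rant, a cheerful status, and a neutral reminder would leave only the rant, since it is the only post with enough words from the aggressive list.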

“You adjust the settings and it’s not immediately clear what’s changed,” McCarthy told the Daily Mail. “However you slowly realize that these posts do have an effect on you…you can set this button, totally forget about it, and have a totally different experience on Facebook that’s not explicitly clear to you but is implicitly affecting you.”

Facebook has come under fire from U.K. regulators for the experiment, and the Electronic Privacy Information Center filed a formal complaint Thursday with the U.S. Federal Trade Commission.

© Copyright IBTimes 2024. All rights reserved.