Google Algorithm 'Failed Spectacularly' During Las Vegas Shooting, Google News Creator Says

The man who spent more than 15 years at the search giant and created Google News says he’s disturbed and frustrated by the fake news that appeared in search results, in the form of 4chan threads, following the Las Vegas shooting. Krishna Bharat thinks the problem runs much deeper.

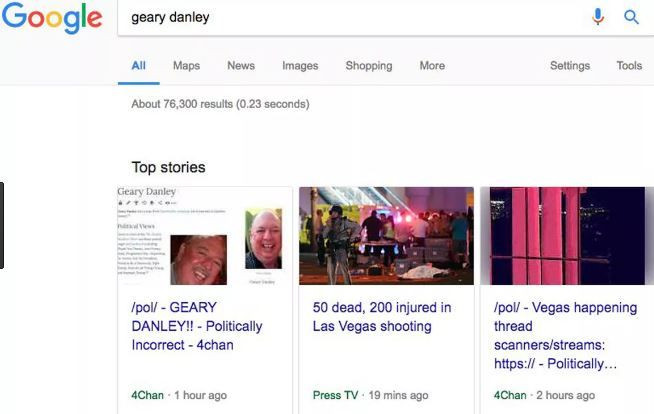

Speaking with Wired, Bharat responded to Google News featuring 4chan hoax threads as the “Top Stories” during and after the Oct. 1 Las Vegas shooting that left 59 people dead. Bharat said the company’s reliance on its algorithm “failed spectacularly” in promoting the incendiary forum’s posts wrongly identifying Geary Danley as the shooter.

“They had this belief that the algorithm would take care of it, but it failed in [this] case spectacularly,” says Krishna Bharat, who worked at Google for 15 years and created the Google News feature, before leaving the company in 2015 to advise startups. “In a way it’s a very small problem but it hints at a deeper problem in terms of philosophy.”

Dear Google, 4chan in Top Stories is a mistake. Please revert to showing vetted sources https://t.co/S3j0r0w2Yi

— Krishna Bharat (@krishnabharat) October 5, 2017

The “Top Stories” feature often appears at the top of Google search results, showing stories directly related to the terms typed into the search. In most cases this produces highly relevant stories from reputable news sources, but it can surface misleading content when very little is known about a developing story. In the case of the misidentified Las Vegas shooter, a flood of searches for Geary Danley led users to the false 4chan threads.

Bharat says Google dropped its safeguards only recently; formerly, only vetted news sites appeared, and any site known to have promoted fake news was blocked. He expressed deep concern over Google’s “abuse” of the Top Stories feature, and said the company owes this trust to both the public and its own brand.

But the Google News team’s vetting was pulled around four years ago, expanding news results from a selection of sources to any and all sources. Publications such as InfoWars, which promote conspiracy theories and often present outright lies as facts, now rank highly in the algorithm.

“If a source was caught faking the news it was out,” Bharat tells Wired. “It had the effect of creating a range of voices - where the quality varied quite a bit but there weren’t hoaxes… There’s a larger community of engineers who have a sense for how information should be ranked and they don’t treat news [as] a special case – and that is a problem.”

Bharat says Google can’t be blamed for the existence or enabling of racist, bigoted people. However, promoting non-vetted news lends legitimacy to extremists and trolls. At the end of the day, Bharat says, Google needs to step up to its responsibility.

“It’s time for them to take things seriously,” Bharat says.

© Copyright IBTimes 2025. All rights reserved.