Humans vs. AI: The History And Future Of Artificial Intelligence

Man always has been haunted, and simultaneously thrilled, by the idea that we are not alone. Long before computers were invented, Frankenstein’s monster and the golem of Jewish folklore normalized the fantasy of artificial intelligence. Today, companies like Google, Facebook and Microsoft, along with Chinese tech giants like Baidu, are investing billions of dollars in AI. International Data Corp. estimates AI solutions are now a $12.5 billion industry, expected to rake in $46 billion in annual revenues by 2020. But this technological progress comes with complex risks.

Scientists estimate AI-powered robots could replace a significant number of employees in a wide variety of industries within the next century. Andrew Ng, an adjunct computer science professor at Stanford and a former Baidu scientist, told International Business Times he expects human radiologists and drivers to be out of work in half that time. “I find it hard to think of an industry that AI will not transform in the next decade,” Ng said. “There is going to be extensive competition between AI and humans for jobs.”


AI-powered technologies already run much of the world, from GPS navigation apps to financial trading. “After it becomes commonplace, nobody thinks about it as AI anymore,” Ng said. “AI is the new electricity. ... No one thinks of your TV as an electric-powered TV.”

Gaurav Chakravorty, head of data science at the investment firm QPlum, has already noticed Wall Street firms like BlackRock laying off employees en masse and switching to AI-powered trading robots.

“Nowadays, there’s so much data that star traders, even the best of the best, are losing money,” Chakravorty told IBT. “Thousands of people, globally, now use AI-powered trading.” Right now, AI models are better at handling big data but can’t account for external factors, such as Uber’s current reputation crisis. Chakravorty estimates it will take another decade before robot traders can weigh all the same complexities human traders do.

By that time, Chakravorty said, AI-powered fintech probably will be making financial decisions for people, saving clients time and stress. This also will make personalized financial consulting accessible to people who can’t afford human advisers. “More than $1 trillion is spent every year for financial services,” Chakravorty said. “People will say, ‘I don’t need to pay that much.’”

Meanwhile, all those mortgage consultants and traders who are out of work will need new skills. Ng, who was previously the vice president and chief scientist of Baidu, said education programs and wage regulation could help reorient an economy run by machines. However, other ethical dilemmas, like sentience and emotional intelligence, are much harder to resolve.

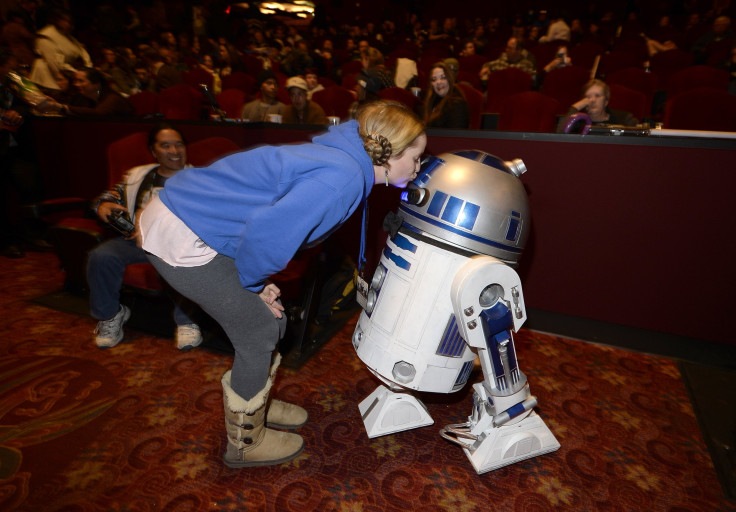

“Narrow AI is models that have been trained on very specific data sets, like cancer cells,” PubNub developer Lamtharn Hantrakul told IBT. “R2-D2 is what we’re all trying to work toward.” PubNub, a data stream network, is pioneering a crucial aspect of artificial intelligence: group think.


Unlike human minds, computer processors can work independently and as a group at the same time. PubNub makes the signals those processors exchange travel much faster. A road full of self-driving cars will need this kind of real-time cloud communication to coordinate traffic safely. The best possible robot driver would incorporate all the available data across many organizations, instead of companies siloing data for their own gain. That’s one way human nature limits AI today. Data sharing is a highly political struggle, to say the least.

R2-D2 would also need a similarly fast and reliable connection to the cloud to be an omniscient sidekick instead of just a machine. Next comes personality, which is a battlefield all its own. Plenty of AI chatbots, virtual girlfriends and even old-school virtual pets like Tamagotchis are making machines friendlier. At the same time, AI tools give computers emotional intelligence by recognizing emotive language and facial expressions. “People will develop a certain amount of emotional attachment to their devices,” Chakravorty said.

This raises a whole new crop of ethical dilemmas. At what point does chatbot marketing to teens, say, promoting makeup to young girls, cross the line between friendly and emotionally manipulative? If your best friend is a robot, what rights do you have to the personal data the robot collects? At what point does emotional attachment to a virtual friend become unhealthy? Will sex robots influence users’ attitudes toward human partners the way some sociologists think sexist video games do? And that’s all assuming these AI robots follow human instructions.

So far, Ng said “automation on steroids” is a better way to think about AI than self-aware androids from "Star Wars." Yet he doesn’t rule out the idea that someday machine learning and AI could lead to a race of sentient robots. That fear permeates popular culture. There are so many movies about malicious robots taking over that it’s practically become its own Hollywood genre.

It’s much easier to navigate the relationship between humans and AI when machines can only serve their creators. What happens when AI power outpaces human control?

The idea of a humanoid clockwork machine with an independent consciousness first surfaced in the 1907 children’s book “Ozma of Oz.” Yet it was the late novelist Isaac Asimov who created the global AI mythos most people now associate with robots: a dangerous relationship between man and machine.

Asimov’s fictional robots confront difficult choices about which human lives they can save and how to prevent human violence. Whether they make the right ethical choice is often left up to the reader. By the time computer scientist John McCarthy coined the term “artificial intelligence” in 1956, sci-fi readers were already wary of the robot-human dynamic. Unlike chirpy little R2-D2, Asimov’s robots can feel in a solipsistic sense.

If AI robots feel discomfort over incomplete tasks or disorder, and can communicate that feeling as articulately as any human, it’s hard to draw the line between computer programming and authentic emotions like frustration or desire. What psychological power play does the future hold for the evolving relationship between mankind and AI?

Israeli historian Yuval Noah Harari argued in his book "Homo Deus" that AI, drawing on data, could make better personal decisions, like whom to marry, than people can by following intuition. He wrote that AI could render humans irrelevant, both economically and politically. If humans value AI only for its output and disregard the individual well-being of smart machines, Harari pondered, what is to keep a superior race of AI masters from treating individual humans with the same callousness? Harari warned that ethically blind development of AI could lead to the most unequal society in history.

“We often think of technology as culturally neutral,” Hantrakul added. “But technology is very, very culturally biased. ... Algorithms inherently include the biases of the people developing them.”

It’s impossible to say what the future of mankind’s relationship to AI will look like. In the next few years, machine learning tools could boost productivity and give people more time to spend on creativity and relationships. “If we don’t end up in a ‘Terminator’ scenario,” Hantrakul said, laughing, “these intelligent agents and machines will allow us to be more human.”

© Copyright IBTimes 2024. All rights reserved.