MIT: Technology Has Not Helped To Lower US Health Care Costs (On The Contrary)

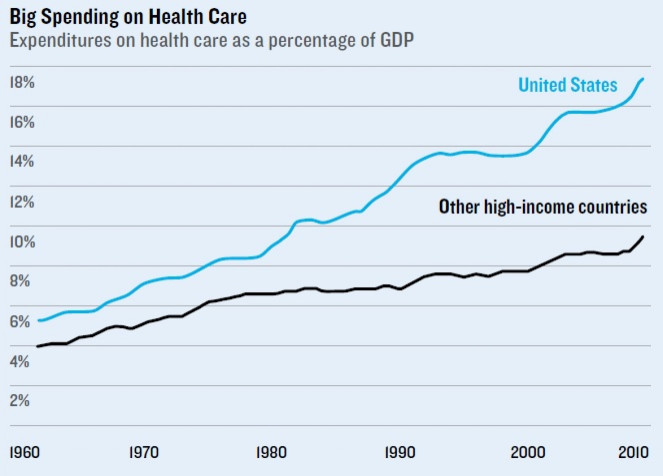

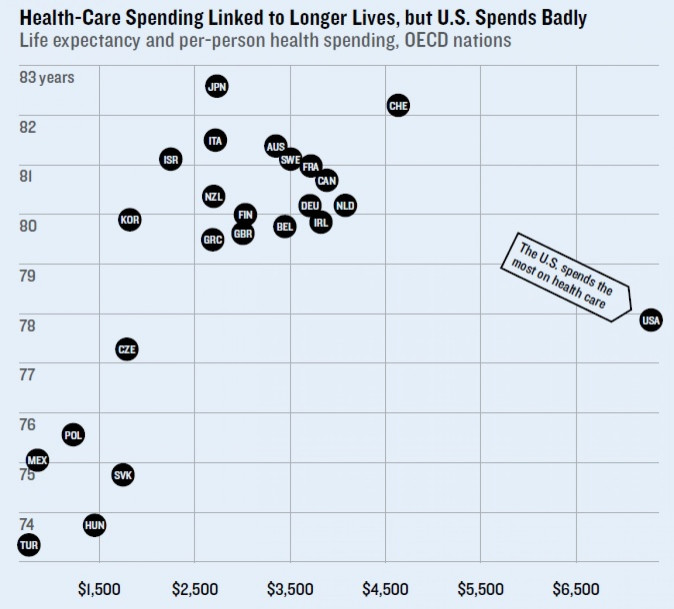

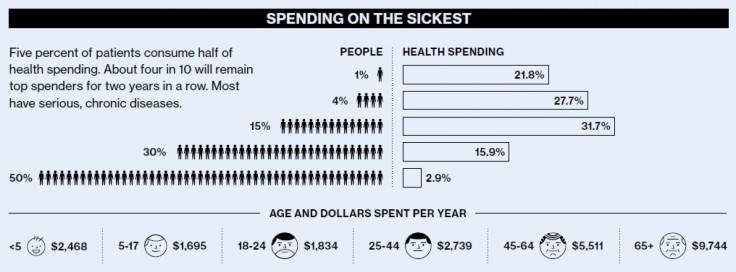

If the high cost of medical treatment in the U.S. continues on its current trajectory, health care spending will make up a third of the U.S. economy and devour 30 percent of the federal budget by the year 2038.

And with five of the seven top lobbying groups in Washington, D.C., run by health care providers, insurance companies and drug companies, the idea that the country is anywhere near solving the issue of America’s obscenely high medical bills is a pipe dream.

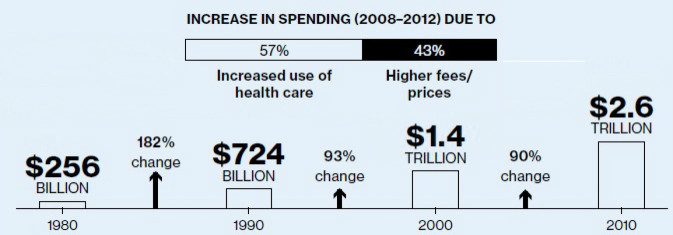

Whether or not the Affordable Care Act (aka Obamacare) puts a check on the alarming rise in the cost of treatment, it’s going to take years to bring costs down from the $2.6 trillion spent in 2010, which was nearly double the $1.3 trillion spent a decade earlier and 259 percent more than what Americans spent in 1990.

The issue looms so large that tackling it is the country’s “challenge of the 21st century,” said Jonathan Gruber, an MIT economist leading a health care group at the National Bureau of Economic Research, in a new report. The report says technology is a mixed blessing: It has contributed to the rise in expenses but could lower them if used in the right way.

“Moore’s Law predicts that every two years the cost of computing will fall by half,” writes Antonio Regalado, senior business editor of MIT Technology Review, in the 19-page report. “But in American hospitals and doctors' offices, a very different law holds sway: Every 13 years, spending on health care doubles.”
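To put the doubling figure in annual terms, here is a rough back-of-the-envelope sketch. It assumes steady compound growth, which the report does not claim, and it uses the rounded spending totals quoted above ($1.3 trillion and $2.6 trillion, ten years apart). The function names are illustrative only.

```python
def annual_growth_from_doubling(years_to_double: float) -> float:
    """Constant yearly growth rate that doubles a quantity in the given span."""
    return 2 ** (1 / years_to_double) - 1


def annual_growth_between(start: float, end: float, years: float) -> float:
    """Constant yearly growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Assumption: smooth compound growth, which real spending does not follow exactly.
health_rate = annual_growth_from_doubling(13)        # "every 13 years, spending doubles"
decade_rate = annual_growth_between(1.3, 2.6, 10)    # rounded totals, in trillions of dollars

print(f"Doubling every 13 years: {health_rate:.1%} per year")
print(f"$1.3T to $2.6T in a decade: {decade_rate:.1%} per year")
```

Under these assumptions, doubling every 13 years works out to roughly 5.5 percent growth a year, while the rounded totals for the most recent decade imply something closer to 7 percent a year.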

The Affordable Care Act aims to boost health coverage and lower costs by increasing the number of insured Americans, by imposing outcomes-based rather than fee-based measures for Medicare, and by digitizing medical records to cut administrative costs. Whether these efforts will be enough remains to be seen, but one thing is clear: Americans simply can’t keep paying so much for health care.

© Copyright IBTimes 2024. All rights reserved.