Elon Musk Warns Of Artificial Intelligence ‘Risk’

This article was originally published on the Motley Fool.

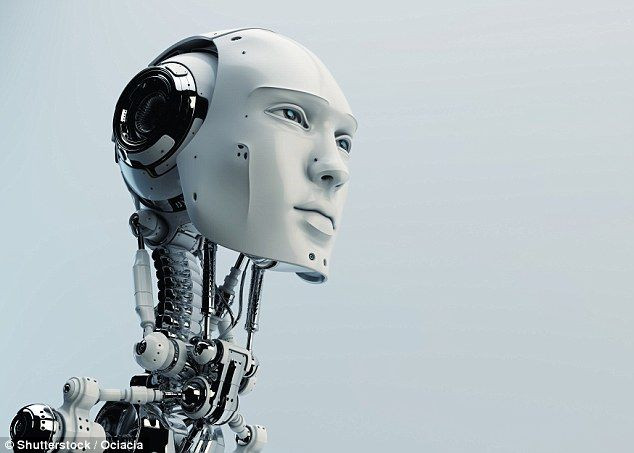

Science fiction has regularly dealt with the idea that artificial intelligence (AI) could lead to humanity's end (or at least its near end). In the Terminator series, a sentient computer system, Skynet, realizes that the best way to keep the world safe (and protect its own existence) is to wipe out mankind. In Battlestar Galactica, robots created as servants slowly outgrow their programming and come to the realization that they, not their human creators, should exist.

It's easy to see why the idea makes for good books and movies. If, for example, AI can beat a human at chess or on Jeopardy!, then it's a short leap to robots someday being smarter than humans -- and for that to go terribly wrong. That leap is getting easier to make these days, since robots and various other AI-powered devices have made their way into reality.

In some cases, they are taking our jobs, while in others, AI handles our cybersecurity or tells us where we might like to eat dinner. Our homes have AI devices that can control our lights, alarm systems, and pretty much anything else.

We may not be living in a post-apocalyptic hellhole where mankind tries to avoid being wiped out by well-armed robots and cyborgs, but our world looks like the opening scene in that type of movie. What once seemed far-fetched has become plausible, and Tesla (NASDAQ:TSLA) CEO Elon Musk believes we need to plan for it.

What did Musk say?

Without pulling any punches, Musk recently told the National Governors Association Summer Meeting that he has seen the most cutting-edge AI technology and believes that people should be "really concerned about it." Coming from nearly anyone else, these comments would seem a tad paranoid. Coming from the person behind Tesla, SpaceX, and Hyperloop, they sound credible, even though Musk is talking like the guy in a movie whose warning people should heed but don't.

"... until people see robots going on the street killing people, they don't know how to react because it seems so ethereal," he said. "We should all be really concerned about AI."

The technology pioneer believes that AI is the rare case where government should be proactive instead of reactive in regulation. It won't work to wait until people are complaining.

"AI is a fundamental risk to human civilization in a way that car accidents, airplane crashes, faulty drugs, or bad food were not," he said. "They were harmful to a set of individuals, but not harmful to society as a whole."

Musk believes that the race to build the next best thing in AI will lead companies to cut corners. Without regulation, he expects that to create problems that could threaten human existence. He, of course, did not mention Terminator, Battlestar Galactica, or any other sci-fi doomsday scenario, but he clearly believes one could happen.

Is Musk right?

We already have machines that can advance beyond their programming without human help. It's not an unfathomable leap to think an evolving AI could come to the conclusion that protecting humanity involves enslaving or even exterminating humans.

Musk is right that limits should be put in place. In the same way government has forced the self-driving-car movement to move more slowly than many companies would want, some sensible regulation for AI -- specifically around testing and what we allow artificial intelligence to control -- could forestall, if not eliminate, the possibility of disaster.

If we create near-sentient AI devices and robots that both learn and think for themselves, we open up the possibility of catastrophic problems. We should heed Musk's warning and move ahead cautiously.

Daniel Kline has no position in any of the stocks mentioned. The Motley Fool owns shares of and recommends Tesla. The Motley Fool has a disclosure policy.